Norwegian Man Takes Action Against OpenAI After ChatGPT Falsely Accuses Him of Child Murder

ChatGPT Faces Backlash Over Defamatory Output Once Again

In a new controversy, ChatGPT is under fire for inaccurate responses, this time for falsely accusing a father of murdering his children. The Austrian non-profit privacy group None Of Your Business (noyb) has lodged a complaint with Norway's data protection authority, claiming that OpenAI knowingly allowed such defamatory output, in violation of the GDPR's data accuracy principle.

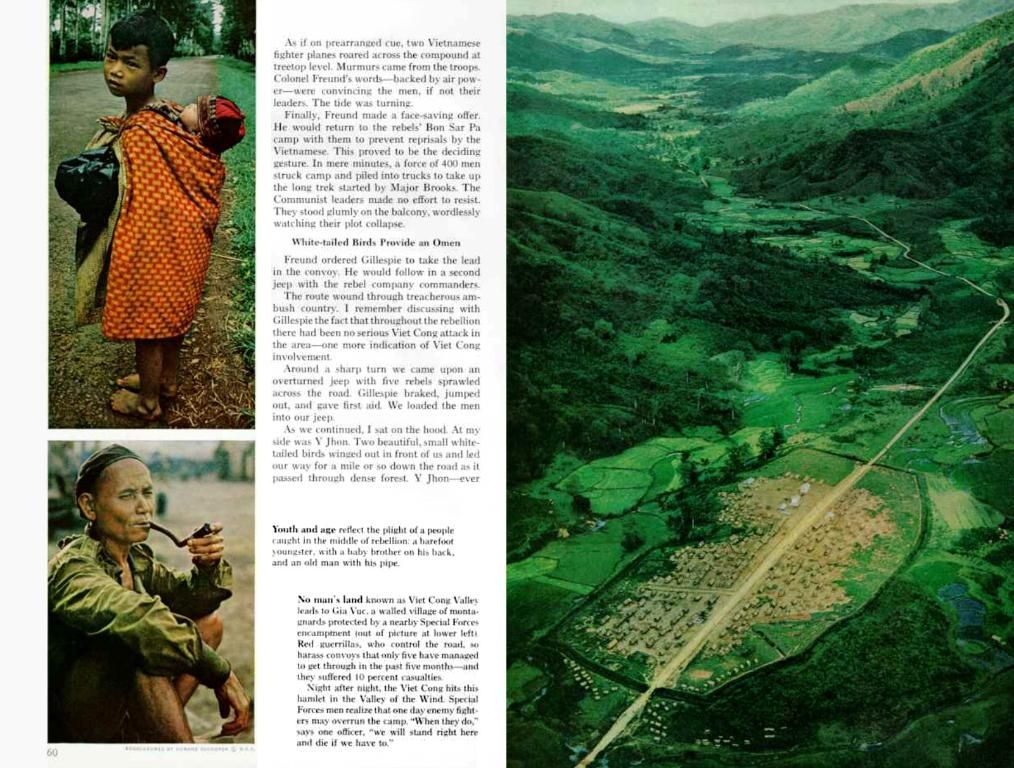

Arve Hjalmar Holmen, a Norwegian father, wanted to check whether ChatGPT had any information about him. The chatbot's answer included accurate personal details, such as the number and gender of his children and the name of his hometown. To his dismay, however, it also portrayed him as a convicted murderer, claiming he had killed two of his children, attempted to kill the third, and been sentenced to 21 years in prison.

Holmen expressed his concern, stating, "Some people might think 'there's no smoke without fire.' The fact that someone could read this output and believe it's true is what frightens me the most."

Last year, noyb filed a complaint against OpenAI asking it to correct a public figure's date of birth, which ChatGPT had gotten wrong because of its "hallucination" problem. According to noyb data protection lawyer Kleanthi Sardeli, OpenAI argued that it could only block the data, not correct it, which left the false information in the system.

Sardeli stated, "AI companies can't just 'hide' false information from users while internally processing false information. AI companies should stop acting as if the GDPR doesn't apply to them, when it clearly does." Reputational damage, she added, can easily occur if hallucinations aren't stopped.

noyb has asked the Norwegian data protection authority to order OpenAI to delete the defamatory output and to fine-tune its model to eliminate inaccurate results, though it does not specify how. It is also asking the authority to fine OpenAI.

AI hallucinations have produced defamatory claims before. Microsoft Copilot described German journalist Martin Bernklau as a child molester, a drug dealer, and an escapee from a psychiatric institution. Australian regional mayor Brian Hood said ChatGPT wrongly associated him with a bribery scandal, and Georgia resident Mark Walters claimed ChatGPT falsely implicated him in embezzling money from a gun rights group.

Joakim Söderberg, another data protection lawyer at noyb, asserted, "The GDPR is clear – personal data must be accurate. Showing ChatGPT users a small disclaimer that the chatbot can make mistakes is not sufficient. You can't just spread false information."

OpenAI says it is working to reduce hallucinations through safety systems, usage policies, and filtering, and by improving how its models respond to questions about real people. It is worth noting, however, that the output in Holmen's case came from an earlier version of ChatGPT without online search capabilities; the chatbot has since gained web search, which has reportedly improved its accuracy.

Hallucinations, it seems, remain a persistent problem for generative AI, with consequences, such as reputational harm, that go beyond simple inaccuracies. As these cases underscore, the importance of data accuracy in AI output, especially under the GDPR, can't be overstated.

- Arve Hjalmar Holmen, on whose behalf the Austrian non-profit privacy group None Of Your Business (noyb) recently filed a GDPR complaint, was wrongly accused by ChatGPT of murdering his children, which he finds particularly frightening.

- In a statement, noyb data protection lawyer Kleanthi Sardeli criticized OpenAI for arguing that it could only block false information rather than correct it, and said AI companies should stop acting as if the GDPR does not apply to them.

- Joakim Söderberg, another data protection lawyer at noyb, reiterated that the GDPR requires personal data to be accurate and that merely showing a disclaimer that ChatGPT might make mistakes does not make it acceptable to spread false information.